Plugstupid

But they managed to mess up in awful ways:

- plugs don’t have a pushbutton, so turning a plug back on requires going to the controlling PC

- the system comes with a CD, which then insists on an internet connection and installs over the net

- you have to register with a code printed on that same CD (not the box, the actual disk)

- you must enter personal info, an email address at the very least

- by default, a checkbox is set which grants permission to PW to obtain your power usage data

- and then... you’re told that you’ll get an email unlocking the “source” application

- (calling an application “source”, when it is anything but, is somewhat confusing for IT people)

- without that activation, the software barfs and exits

I’m not amused. This is hardware, after all, so software protection that goes as far as collecting my email address, insisting on internet connectivity, and requiring over-the-net activation is totally over the top, if you ask me. I’m happy to have these plugs now to monitor exact power consumption around the house, and I sure intend to use them fully - but this approach is stupid.

These plugs made me wiser indeed, but probably not quite in the way the manufacturer intended...

Update: just got the confirmation email. Ok, so it took 15 minutes... my point is that this shouldn’t have been required in the first place.

Anomaly

Glitch

Weird.

Thanks to everyone who reported this - I’ve been out of the country (and offline) for a few days, hence the delay in fixing this.

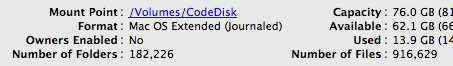

Netbook

On the left: 1.6 GHz Atom, 512 MB RAM, 8 GB SSD, 1024x600.

On the right: 25 MHz 486SX, 10 MB RAM, 200 MB HD, 640x480.

Here’s another size comparison, with a 17” MacBook Pro (Core 2 Duo) this time:

Mac: 10x the cost, 4x the CPU speed. The Tosh and the Mac both weigh 3x as much as the A110L netbook.

Kenya

Kofi Annan and Barack Obama - I am extremely impressed by what Africa has to offer.

The JavaScript race

SquirrelFish Extreme: 943.3 ms, V8: 1280.6 ms, TraceMonkey: 1464.6 ms

Given the fact that JS is part of just about every web browser by now, it looks like this modern dynamic, ehm... scripting language is becoming seriously mainstream.
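The three totals above are easier to compare as ratios. A small Python calculation using only the numbers quoted here (the benchmark suite isn't named, so none is assumed; lower times are faster):

```python
# Total times quoted in the post, in milliseconds (lower is faster).
timings_ms = {
    "SquirrelFish Extreme": 943.3,
    "V8": 1280.6,
    "TraceMonkey": 1464.6,
}

def relative_to_fastest(timings):
    """Return each engine's time as a multiple of the fastest engine's time."""
    fastest = min(timings.values())
    return {name: t / fastest for name, t in timings.items()}

if __name__ == "__main__":
    ratios = relative_to_fastest(timings_ms)
    for name, ratio in sorted(ratios.items(), key=lambda kv: kv[1]):
        print(f"{name:22s} {ratio:.2f}x")
```

Run as a script, it lists SquirrelFish Extreme at 1.00x, V8 at about 1.36x and TraceMonkey at about 1.55x the fastest time.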

About Lua

More seriously, I really subscribe to the idea that "if the only tool you have is a hammer, you treat everything like a nail". So, programmers should learn several languages and learn how to use the strengths of each one effectively. It is no use to learn several languages if you do not respect their differences.

That last sentence says it all. It implies learning several languages well - not just skimming them to pick on some perceived flaw. All major programming languages are trade-offs, and more often than not incredibly well thought-out. To put it differently: if you can’t find an aspect of language X at which it is substantially better than what you’re using most of the time, then you haven’t understood X.

Meta-productivity

It’ll be very interesting to see how the 43f website evolves, given this new insight.

Shelved

Life ≠ prices

Just spent a few days in Berlin. Lots of glitz, history, art, and architecture, of course. I was deeply shaken by the memorial - not least because IMO it could all happen again, to anyone, anywhere, anytime. It’s encouraging to experience such a well-balanced statement, specifically in a reunited Berlin and in this day and age.

The reason I mention Berlin is that a few days of wandering around made me realize that life as a tourist would be a lot more fun if not every single sip, bite, and step were tagged with a price - each one a stupid little distraction.

Speaking of which ... next week we’ll be strolling in Paris, my home town when I was 7 and 8. It’ll be all about delight.

Green consumerism

What I don’t see is information about the level of energy / raw material waste required for the production of goods. It’s a bit like adding insult to injury: first we become responsible for a hugely wasteful production and transportation process by buying some flashy new X, and then we become doubly wasteful by discarding the product well before it has ceased being useful.

I just don’t get it. We drive a Volkswagen Golf which is definitely not the best in terms of gasoline economy, but I wouldn’t dream of getting rid of it after 7 years of fantastic, enjoyable service. It’s fast, it’s gorgeous, it’s luxurious. Yet I think we qualify as having a respectably low “carbon footprint” - to use that phrase-du-jour. How? By driving less. How about technology in the house, then? That too: we turn stuff off. Genius, eh?

There used to be such great solutions ages ago. Such as power-cords which can be unplugged, and switches embedded in the power cord, before the power bricks. Remotes to control stuff are wonderful. But to turn stuff on and off? C’mon, get a life. Or better yet - get up and, ehm ... walk?

I can’t stop recommending this 11-minute TED presentation by Chris Jordan.

And to get back to computers: if you’re looking for an always-on server, have a look at Bubba. It’s a full Linux setup, with all the extensibility of Debian built in, right out of the box (the manual includes info on how to get SSH access, it’s not some hidden-on-reflash feature). I’m using a similar, but less streamlined, setup based on an NSLU2, and it does SVN, iTunes music, even handles Time Machine backups. On roughly a kilowatt-hour per week, i.e. an average draw of about 6 watts.

Oh, and remind me to also rant about noise levels, one day :)

Identity

Web apps

Group buy

Good movie

I have boundless respect for movies which address major issues without pointing the finger or talking in primitive “us vs. them” terms, as epitomized by the “you’re either with us or against us” phrase. On the human level, every attempt to define “us” and “them” ends up being shallow - there is only me and you. Life is not about labeling opponents, adversaries, enemies, or terrorists, but about building bridges and crossing them - one person at a time, if need be. Brothers (the movie) is an illustration of that.

Ok, now you can go back to doing whatever nerdy stuff you were doing ;)

TED at its very best

Design

Design is what has made me tick for the past decades, and will do so for decades more to come, I hope. Design has no age limits. Design is about the why, the who, and the how - asking "why" things work the way they do, "who" figured it out, and "how" they did it. Design is about beauty, of course. But design is also about social structures, when trying to understand what floats to the top of a group, a community, or a society. And design is about power and politics, when you think about which designs succeed in changing the world we live in.

Design is a lot about creativity, obviously. Design is where mind meets substance. Design is where emotions and rationality dance with each other. Like quantum physics, design is about entanglement. You can't just sit and dream, waiting for the right design to come to you. Nor slave your way through to a good design. Design is about tension, and about balance. And design doesn't fit a 9-to-5 schedule (me neither, so now I have an excuse!).

But above all, design is about the future. Those of us involved in design are those who want to shape that future - literally of course, but also figuratively. Designers look forward. Designers want to make this world a better world. Not by forcefully changing the direction of what is happening now, but by looking beyond today's horizons and describing the places we could be in tomorrow. Design is not a methodology of "push", but one of "pull".

People (and things) are the way they are today, often for overwhelmingly valid and explicable reasons. Bodies (in both senses) in motion have a way of continuing on their previously chosen paths. Design is not about today's path, but about tomorrow's choices.

FYI, I'm writing this as a reminder to myself that I should be less concerned with what is happening today and more focused on what can be tomorrow. And because it gives me the opportunity to mention that I'm immensely proud of our daughter Myra, who wants to be... a designer. I can't think of many things more fulfilling, both for her and for us, than to want to actively be part of our respective futures - and to contribute to shaping them.

Wrap it up

A particularly interesting set of extensions is jQuery UI - I really like the way you can pick and choose what you want and get a personalized package with a few mouse clicks on the download builder page.

One of the countless projects of mine which never materialized was the "Standalone Executable Assembly Line" (SEAL). I still think it would be very useful. So would a much simpler system which does this for pure Tcl scripts, btw (or Lua, Python, Ruby, whatever).

Not enough hours in the day. Nor years in a lifetime.

Darwine

The 1.0rc1 release of Wine is very easy to install (I did a full build from source, as described here, which builds a dmg), with the usual "drag the app (a folder in this case) to the Applications folder". Everything appears to be neatly tucked away in a 67 MB app bundle. I set up MacOSX so that all files with extension ".exe" launch WineHelper. And that's it - exe files become double-clickable and they... work. Pretty amazing stuff.

Behind the scenes, Darwine launches X11 for the GUI, and opens two more windows: a process list and a console log.

I tried Pat Thoyts' Tclkit 8.4 and 8.5 builds and they work - Tk and all. Both share one quirk: stdout, stderr, and the prompt end up on the console, but that's about it. Apps run on drive Z:, which is the Mac file system, so everything is available.

This is fascinating. Maybe the time has come to try running MSVC6 straight from MacOSX. It would be great to automate builds from a normal command-line environment, running only a few apps on Win32 (i.e. msvc6's "cl.exe") and staying with TextMate, bash, make, as usual.

I just moved my Vista VM off to a secondary disk, reclaiming over 10 GB of disk space filled with something that taxes my patience and strains the Mac (1 GB of RAM allotted to VMware and it still crawls). Having Win98, Win2k, and WinXP VMs is enough of a hassle: I just tried upgrading XP to SP3 and it failed because 700 MB of free space wasn't enough. Why didn't a quick disk check before the install warn me? Actually, the real question is: why is a 4 GB virtual disk too small to comfortably run XP?

Darwine & Wine really deserve to succeed IMO. So that I can treat Windows as a legacy OS. And get back to fun stuff.

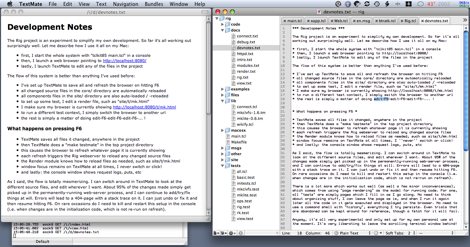

Development workflow

Click for full image - my real setup uses larger windows, filling a 1680x1050 screen.

The top-left is a Camino browser, with underneath it an iTerm window showing the stdout/stderr log. On the right is the TextMate editor. This is all MacOSX, clearly.

The text on the screen explains a bit of what's going on. The reason this works so well is that I'm developing inside a running process - something people working in Common Lisp and Smalltalk/Squeak take for granted. To work on something, I add a new test page with a mix of comments and embedded Tcl calls. The central motto here is: Render == Run. Unlike interactive commands, the test page performs all the steps needed to reach a certain state, and shows any output I want to see along the way. On a separate page in the browser, I can browse through all namespaces, variables, arrays, open channels, loaded packages, and more (what "hpeek" did, but far more elaborate). Apart from crashes and startup-related changes to the code, the server-based development process is never stopped. I can add calls to try out new code, and then decide after the fact which details to look into. Then I just edit and hit F6 to fix or finish the code, whatever. Last but not least, one of the test pages runs a Tcl test suite, which gets extended as soon as the newest code stabilizes a bit. So again, it's a matter of keeping that page open in the browser and hitting F6.

This approach has already paid for itself many times over (the "cost" being only my time spent on it, evidently).
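To make the Render == Run idea concrete, here is a minimal Python sketch. It is not Mavrig or the author's Tcl setup: it assumes a Tcl-like `[command args]` syntax inside a plain-text test page, and the commands (`set`, `incr`) and the `render` function are hypothetical stand-ins for procs living in the running server. Rendering the page executes every call, so reloading it (the F6 step) re-runs the whole scenario.

```python
import re

# Hypothetical server-side state and commands; in the post these would be
# Tcl procs inside the long-running server process.
state = {}

def set_var(name, value):
    state[name] = int(value)
    return str(state[name])

def incr(name, amount="1"):
    state[name] = state.get(name, 0) + int(amount)
    return str(state[name])

COMMANDS = {"set": set_var, "incr": incr}

# A bracketed call such as "[incr counter 5]"; nesting is not supported.
CALL = re.compile(r"\[([^\[\]]+)\]")

def render(page):
    """Render a test page: each [cmd args...] is executed and replaced by its result."""
    def run(match):
        cmd, *args = match.group(1).split()
        try:
            return COMMANDS[cmd](*args)
        except Exception as exc:
            # Report the failure inline; the "server" keeps running.
            return f"<error: {exc!r}>"
    return CALL.sub(run, page)

TEST_PAGE = """\
Reset to a known state: [set counter 0]
After step one: [incr counter 2]
After step two: [incr counter 3]
Unknown command: [nosuch x]
"""

if __name__ == "__main__":
    print(render(TEST_PAGE))
```

Because the page starts by resetting its own state, every render produces the same output, and an error in one call shows up inline instead of stopping the process.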

Silence

As I said, the result is perfect - and music is fun again, even at a very low volume.

Which makes this post hilarious ;)

Good company

"Now that the best and the brightest have spent a decade building and debugging threading frameworks in Java and .NET, it’s increasingly starting to look like threading is a bad idea; don’t go there. I’ve personally changed my formerly-pro-threading position on this 180° since joining Sun four years ago."

See Tim Bray's weblog for more.

Update: more good company - Donald Knuth has this to say:

"I won’t be surprised at all if the whole multithreading idea turns out to be a flop, [...]"

and about multicore:

"How many programmers do you know who are enthusiastic about these promised machines of the future? I hear almost nothing but grief from software people, [...]"

Read the interview for context.

Reality

Well, I don't care. The professional world we live in is so detached from the human condition by now, that I need to draw a line. Not a day goes by where I don't think about world events and the personal tragedy associated with them. The buck stops here. I no longer want to blog about "fun stuff" without exposing that other side of me.

The article that led to all this is an interview with Robert Fisk. Which made me realize that today, wars are waged by proxy - the actors are a bunch of young kids we don't know, and the witnesses tell stories we hear little about. Soldiers and reporters (oh, and some collateral damage). With our televisions and computers as the ultimate proxy.

I try to imagine the reality on the ground in a conflict zone such as the Middle East, from any perspective. And I can't really. Imagine growing up or growing old in such a context. Day to day, for years on end. The mind boggles. When I merely broke my leg a few months back, an immense safety net of family, friends, medical care, compassion, personal attention, and empathy unfolded itself. Then, in accurate lock-step with my recovery, that same safety net retreated, going on standby again just as automatically. Life is darn good here, not according to some metric, but because of the knowledge that no matter what happens, life will still eventually work out ok. It's hard to imagine two situations further apart than life over here and life over there. The horror being that much of this is due to armed, man-made conflict. Why, with all our culture and technology, are we as a whole still living in medieval times?

The injustice on this planet is obscene.

Web apps

It's particularly interesting to see how the workflow comes together with just TextMate and Safari. There is no explicit compile or even save step; it's all based on a single mechanism: Render == Run. Which, as it turns out, is exactly how I've been doing my own small-scale web app development - based on an internal package called Mavrig. This is the way to build web apps, if you ask me, all the way to the deployment approach used at the end of the above video.

Ok, so "all" I have to do now is finish Vlerq and Mavrig, to show how Tcl + Metakit technology can achieve the same effectiveness, but with an order of magnitude less complexity than Python + Django (+ SQLite in local mode, presumably).

I wonder how long it'll take for this to show up on iPhones and iPod Touches...

Nightmare scenario

The Vlerq project is about very high-level concepts as well as the close-to-raw-silicon implementation. After nearly four decades of exposure to computing technology, I consider Lisp the most powerful production-grade environment available today for taking very high-level concepts to a runnable form. A system such as SBCL combines everything into one system which bridges an extraordinary range of interactivity (through Slime and Emacs), excellent introspection and debugging, and machine-code generation, all in one. I envy the masters who know how to live inside SBCL and are able to perform magic in a world which brings together extreme abstraction and raw performance.

Problem #1 is that SBCL is not deployable, let alone embeddable in other languages (a separate process is tricky for robust deployment, and SBCL is a pretty large environment to drag along). For something as general-purpose as Vlerq, being tied to a little-used system is a big issue. Problem #2 is that I have far too little experience with both SBCL and Emacs to be truly productive with them in the coming years (yes, it takes years to make app, code, and bits sing, in my experience).

Problem #1 could be addressed by generating code for another system, such as the (delightfully Scheme-like) Lua language, along with C-coded primitives for all performance-specific loops and bit-twiddling. But that means problem #2 will sting even more: now I not only have to become proficient with SBCL, I also need to implement complex code on multiple levels, so that the generated Lua + C source code flies well.

It gets worse: problem #3 is that Lua is not really rich enough yet in terms of application-level libraries. In fact, I consider Tcl to be one of the best application-level languages around (yes, above even Python and Ruby) because of its excellent malleability and the way it supports domain-specific languages. Like Lisp, Tcl melds code and data together in a very fluid way - meaning it allows you to bring the design towards the app, instead of the other way around. It's ironic that Lisp and Tcl share this property, but at totally opposite ends: Lisp on the algorithmic side and Tcl on the application glue side.

So what's the nightmare? Well, to be truly effective in such a context, I'd have to be a master in SBCL, Emacs, Lua, C, and Tcl. And I'm not. I've been feeling the pain for a decade now. And I just don't know whether I should aim for such a context, or just muddle along in one or two technologies - with all the limitations associated with them.

I'd love to be proven wrong, but those who say "use language X" probably don't understand what breadth of conceptual / performance gap I'm trying to bridge. In a way that works outside a laboratory setting, that is.

Refutation

Imagine taking this one step further and turning it into a mechanism to improve blog / wiki / forum discussions: people can tag/vote on each comment to qualify it as one of the DH1..DH6 categories. A rule could be that each person gets associated with, say, the average voting result, and that a person cannot tag any other comment above his/her own "level" (or perhaps a single step above). The reasoning being that only people considered able to argue on a high level would be allowed to tag other comments as being on a similar level.

With such a system, one could then cut off low-class entries when going through a lengthy discussion thread. Quoting one of the last paragraphs in the article:

"But the greatest benefit of disagreeing well is not just that it will make conversations better, but that it will make the people who have them happier. If you study conversations, you find there is a lot more meanness down in DH1 than up in DH6. You don't have to be mean when you have a real point to make. In fact, you don't want to. If you have something real to say, being mean just gets in the way."

I think it would make discussions far more interesting, particularly controversial ones...
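The tagging rule proposed above can be sketched in a few lines of Python. Everything here is an assumption for illustration: a person's level is the plain average of the DH levels their comments received, newcomers start at DH1, people may not tag their own comments, and `HEADROOM` selects between the strict rule (0) and the "single step above" variant (1). `Discussion` and its methods are hypothetical names.

```python
from collections import defaultdict
from statistics import mean

MIN_LEVEL, MAX_LEVEL = 1, 6   # DH1 .. DH6
DEFAULT_LEVEL = 1             # assumption: people with no tagged comments start at DH1
HEADROOM = 1                  # 0 = strict rule, 1 = "a single step above"

class Discussion:
    def __init__(self):
        self.author = {}                # comment id -> person who wrote it
        self.votes = defaultdict(list)  # comment id -> DH levels it was tagged with

    def post(self, comment_id, person):
        self.author[comment_id] = person

    def comment_level(self, comment_id):
        tags = self.votes[comment_id]
        return mean(tags) if tags else None

    def person_level(self, person):
        """Average of all tags on this person's comments."""
        tags = [t for cid, who in self.author.items() if who == person
                for t in self.votes[cid]]
        return mean(tags) if tags else DEFAULT_LEVEL

    def tag(self, voter, comment_id, level):
        if not MIN_LEVEL <= level <= MAX_LEVEL:
            raise ValueError(f"level must be between DH{MIN_LEVEL} and DH{MAX_LEVEL}")
        if self.author.get(comment_id) == voter:
            raise PermissionError("no tagging of your own comments")
        if level > self.person_level(voter) + HEADROOM:
            raise PermissionError(f"{voter} may not tag above their own level")
        self.votes[comment_id].append(level)

    def visible(self, cutoff):
        """Comment ids to show when reading the thread; untagged comments are kept."""
        shown = []
        for cid in self.author:
            level = self.comment_level(cid)
            if level is None or level >= cutoff:
                shown.append(cid)
        return shown
```

One consequence shows up right away: with the strict rule and everyone starting at DH1, nobody could ever tag above DH1, so that variant would need some seeding; with one step of headroom, levels can climb gradually as people earn them.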

How we make decisions

LuaVlerq 1.7.0

This is Lua-only, since Lua is now tightly integrated into Vlerq (or is it the other way around?). The intention is to later add thin language wrappers for additional language bindings.

I'm pleased with the 1.7.0 code. Despite its immature status, it's small and it's snappy - both are essential starting conditions for me.

On track

I'm very pleased with v7 because it includes (early) code for supporting missing values and mutable/translucent view layers. This is where the v4 design had reached its limits. And the whole code base is only around 5K lines of C plus 200 lines of Lua. Several design choices, particularly the main internal data structures, have stood the test of time and have survived virtually as is through all the recent rewrites. With a fair amount of functionality implemented, this indicates that the core design is sound (even if some code is still pretty ugly).
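The post doesn't describe how Vlerq implements missing values or translucent layers, so the following is only a generic Python illustration of the two ideas, not Vlerq's design or API. It assumes a column-oriented, immutable base view; a layer records changes (including "this value is missing") keyed by row and column, and every read falls through to whatever lies underneath. Because a layer exposes the same `get`/`size` interface as the base, layers can be stacked. All class names are hypothetical.

```python
class _Missing:
    """Sentinel for a missing value, distinct from None (which may be real data)."""
    def __repr__(self):
        return "MISSING"

MISSING = _Missing()

class BaseView:
    """Immutable column-oriented view: column name -> tuple of values."""
    def __init__(self, columns):
        self._cols = {name: tuple(values) for name, values in columns.items()}
        lengths = {len(v) for v in self._cols.values()}
        if len(lengths) > 1:
            raise ValueError("all columns must have the same length")
        self.size = lengths.pop() if lengths else 0

    def get(self, row, col):
        return self._cols[col][row]

class TranslucentLayer:
    """Mutable layer on top of any view: writes stay here, reads fall through."""
    def __init__(self, under):
        self.under = under
        self.size = under.size
        self._changes = {}   # (row, col) -> new value or MISSING

    def get(self, row, col):
        key = (row, col)
        if key in self._changes:
            return self._changes[key]
        return self.under.get(row, col)

    def set(self, row, col, value):
        if not 0 <= row < self.size:
            raise IndexError(row)
        self._changes[(row, col)] = value

    def clear(self, row, col):
        """Mark a value as missing without touching the view underneath."""
        self.set(row, col, MISSING)

    def discard(self):
        """Drop all changes, making the layer fully transparent again."""
        self._changes.clear()
```

The base view never changes; discarding or replacing a layer is all it takes to undo a set of edits.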

Onwards!

Site changes

Weight (or: Vlerq progress)

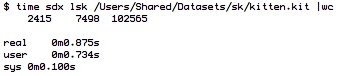

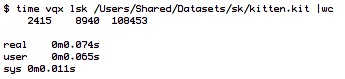

Here's the same with vqx, which I'm using to try things out in Vlerq these days:

Tclkit is 2.8 MB, with Tcl/Tk and Metakit inside, and has to open/launch the sdx starkit. Vqx is a 0.2 MB standalone app with Lua and Vlerq embedded - it implements lsk as follows:

In all fairness, there are many years of experimentation and learning between the two - the above code is considerably streamlined over what I did in sdx. As is Vlerq w.r.t. Metakit.

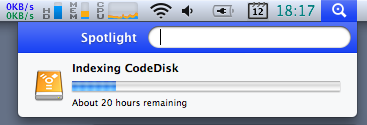

Too heavy for Spotlight?

RAID + backup

Let me explain. I've been moving more and more information to disk lately, scanning in books and de-duplicating all CD-ROM/DVD backups (we had all our vinyl and negatives digitized a while back). It takes only 250 GB so far, not counting MP3/M4V files, that is (which have less uniqueness value to me). See this NYT article, where Brewster Kahle summarizes it well: "Paper is no longer the master copy; the digital version is".

But with bits, particularly if some of 'em are in compressed files, data integrity is a huge issue. Which is where RAID makes sense. So I got two big SATA disks and hooked them up to trusty old "teevie", a 6-year-old beige box stashed away well out of sight and hearing. With RAID 1 mirroring, everything gets written to both disks, so if either one fails: no sweat.

But RAID does not guard against fire or theft or "rm -r". It's a redundancy solution, not a backup mechanism. I want to keep an extra copy around somewhere else, just in case. Remote storage is still more expensive than yet another disk, and then you need encryption to prevent unauthorized access. Hmm, I prefer simple, as in: avoid adding complexity.

So now I'm setting up RAID to mirror over three disks. The idea is that a three-way RAID 1 mirror runs just fine in "degraded" mode with only two of its three disks present, and still keeps every block on two disks. Once in a while I will plug in the third disk, let the system automatically bring it in sync with the rest, and then take it out and move it off-site again.

But the story does not end here. Bringing a disk up to date is the same as adding a new disk: RAID will do a full copy, taking many hours when the disks are half a terabyte each. Which is where the "write-intent bitmap" comes in: it tracks the blocks which have not been fully synced to all disks yet. Whenever a block is known to be in sync everywhere, its bit is cleared. What this means is that I can now put all three disks in, let them do their thing, and after a while the bitmap will be all clear. Once I pull out the third disk, bits will start accumulating as changes are written to the other two. Later, when putting the third disk back on-line, the system will automatically copy only the changed blocks. No need to remember any commands or start anything, just put it on-line. Quick and easy!
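The write-intent bitmap idea is easy to model. Below is a toy Python simulation of a three-way mirror under loudly simplified assumptions: one bit per block rather than per multi-block chunk, bits cleared as soon as a write reaches every member, and everything kept in memory. The real Linux md driver stores its bitmap persistently and differs in the details; `MirrorWithBitmap` and its methods are made-up names for illustration.

```python
class MirrorWithBitmap:
    """Toy RAID 1 mirror with a write-intent bitmap (simplified model)."""

    def __init__(self, n_disks, n_blocks):
        self.disks = [[b""] * n_blocks for _ in range(n_disks)]
        self.present = [True] * n_disks
        self.dirty = set()  # the "bitmap": blocks not yet in sync on every disk

    def write(self, block, data):
        for disk, here in zip(self.disks, self.present):
            if here:
                disk[block] = data
        if all(self.present):
            self.dirty.discard(block)  # in sync everywhere: clear the bit
        else:
            self.dirty.add(block)      # some member missed this write: set the bit

    def read(self, block):
        return self.disks[self.present.index(True)][block]

    def remove(self, i):
        """Pull disk i out; the array keeps running in degraded mode."""
        self.present[i] = False

    def re_add(self, i):
        """Put disk i back and copy only the blocks flagged in the bitmap."""
        source = self.present.index(True)
        for block in self.dirty:
            self.disks[i][block] = self.disks[source][block]
        self.present[i] = True
        copied = len(self.dirty)
        if all(self.present):
            self.dirty.clear()  # every member is in sync again
        return copied


if __name__ == "__main__":
    raid = MirrorWithBitmap(n_disks=3, n_blocks=1000)
    for b in range(1000):
        raid.write(b, b"v1")
    raid.remove(2)              # third disk goes off-site
    raid.write(10, b"v2")
    raid.write(20, b"v2")
    print("blocks copied on re-add:", raid.re_add(2))
```

In this run only the 2 blocks changed while the third disk was away get copied back, instead of all 1000 in a full resync.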

If a disk fails: buy a new one, replace it, done. Every few months, I'll briefly insert the third disk and then safely store it off-site again. If I were to ever mess up really badly (e.g. "rm -r"), I can revert via the third one: mark the two main disks as failed, and put the third one in for recovery to its older version.

Methinks it's the perfect setup for my needs. Total cost under €300 for the disks plus cheapo drive bays. Welcome to the digitalization decade.

Sculpting

"The Platonic form of Lisp is somewhere inside the block of marble. All we have to do is chip away till we get at it."

That's an elegant and accurate way of describing the software design process. And as the author of "Hackers and Painters", he ought to know.

Jitters

Now there's a road map for LuaJIT 2.x, and it promises to become considerably better still. Wow.

I'm very happy to use Lua inside Vlerq now. Performance matters a lot across the scripting <-> C boundary, because one of the things you want to do is perform brute-force selection using custom conditional code, and being able to use a scripting language for those conditions is tremendously useful. I can now think about taking a condition (even from Tcl, just parse and translate to Lua), and using it in C-based brute-force loops. With LuaJIT, that gets compiled to machine code, and since the loops are usually very simple and totally repetitive, chances are that LuaJIT can infer static datatype information right away.
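Here is a rough Python stand-in for that pipeline: a condition written in Tcl `expr` style is translated once, compiled once, and then applied row by row in a brute-force loop. In the post the translation targets Lua and the loop lives in C with LuaJIT compiling the condition; here both halves are Python. The regex-based `translate` (a hypothetical helper) only handles `$var`, `&&`, `||`, `eq` and `ne`, so treat it as a toy; a real translator would parse the expression. It also uses `eval`, which is only acceptable for trusted input.

```python
import re

# Tiny token rewrites from Tcl expr syntax to Python (assumption: only these appear).
_TCL_TO_PY = [
    (re.compile(r"\$(\w+)"), r"\1"),   # $name -> name
    (re.compile(r"&&"), " and "),
    (re.compile(r"\|\|"), " or "),
    (re.compile(r"\beq\b"), "=="),     # Tcl string equality
    (re.compile(r"\bne\b"), "!="),     # Tcl string inequality
]

def translate(tcl_condition):
    src = tcl_condition
    for pattern, repl in _TCL_TO_PY:
        src = pattern.sub(repl, src)
    return src

def compile_condition(tcl_condition, columns):
    """Turn the condition into a function taking one value per column."""
    code = f"lambda {', '.join(columns)}: {translate(tcl_condition)}"
    # No builtins: the condition only sees the column values it is given.
    return eval(code, {"__builtins__": {}})

def select(view, tcl_condition):
    """Brute-force selection: test every row, return the matching row indices."""
    names = list(view)
    pred = compile_condition(tcl_condition, names)
    rows = zip(*(view[n] for n in names))
    return [i for i, values in enumerate(rows) if pred(*values)]


if __name__ == "__main__":
    people = {"name": ["ann", "bob", "cy", "bob"], "age": [25, 41, 37, 19]}
    print(select(people, '$age > 30 && $name eq "bob"'))
```

Compiling the condition once and calling it per row keeps the inner loop to a plain function call over simple values, which is the kind of tight, repetitive loop the post expects LuaJIT to specialize.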

Just in case you think I've now joined the church of Lua, and abandoned all others: not so. All I do is use Lua as internal scripting language. At this stage it is also my test environment, to avoid complexity, but once the Vlerq core is sufficiently complete I intend to embed the whole kaboodle into whatever language makes sense. IOW, Lua is my systems scripting language.

I'm soooo tired of "faith-based" language communities. Lua, Python, Ruby, Tcl, whatever. All everyone seems to be doing is defending/protecting their own turf. So many missed opportunities, so little synergy. What an utter waste of human resources.

Personal details

Trouble is - all that data just keeps on getting collected. The big problem is that it's not just about what people do with that data today, but what someone might decide to do with it in the future. Where is all that data going? How come it's getting into all those laptops and CDs in the first place?

There is no need to delete anything, now that storage is so cheap (except perhaps in the White House, or while circumventing it?).

We're setting ourselves up to pay dearly one day...

OOPs

It's déjà vu all over again... the same discussion has been raging for years in the Tcl community. Both languages are more general than just OOP. And both "suffer" from a perceived lack of it as a consequence.

In my opinion, OOP is not all it's cracked up to be. When you're creating new DSL layers or doing the kind of meta-programming Lisp is famous for, then forcing everything into OO turns it into a straitjacket - limiting the ways in which to think about abstraction. Or to put it differently: yes, OO is great for modeling real-world objects, but that doesn't carry over to DSL design and some forms of abstraction/decomposition.

(full disclosure: I've been going through SICP again recently - the metacircular evaluator and the Y combinator are fantastic stuff)

Stay hungry

“Do you guys know Fermat’s Last Theorem?”

[...] “Everyone here knows that it is Prof. Andrew Wiles who spent about 10 years to prove it. The final proof from him came in publication in 1994”

“It is correct. However, do you guys know how Prof. Andrew Wiles found these 10 years to dedicate himself to the Great Fermat theorem?”, he sighed, “Prof. Andrew Wiles told me by himself, in order to focus on the proof of the Fermat’s Last Theorem, there was one year in which he worked extremely hard to write 20 papers and locked them up in his desk drawer. Then he would pick up two to publish each year. In this way, he gained precious ten years to allow himself to do nothing else except Fermat’s Last Theorem”

Unfortunately for me, this does not imply the inverse: spending 10 years on something does not guarantee that anything even remotely useful comes out... but still, if this is true, it's quite an amazing example of focus and perseverance!

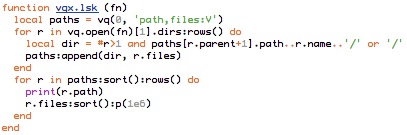

New?

See http://www.wettershop.de/ under Designprodukte/Thermometer. It sticks on the outside of a window, BTW.

Images

Last year, it was an incredible journey through India by Danielle & Olivier Föllmi (ISBN 9782732431079). This year it is the sequel to "La Terre vue du ciel" by Yann Arthus-Bertrand (ISBN 9782732436661). The publisher's site prevents deep-linking, unfortunately.

For images and prints from these and several other photographers see this site.

For me, it is a truly incredible way to start each day...

year = year + 1

Here is my personal list of favorite films, in case you're interested.